
Optimizing Agri Production yield using numerical models and massive historic data

1. Summary

1.1. Scope of pilot

Anticipating yield potential is a major challenge today for making better agri-food decisions. To address it, CybeleTech developed automated tools that identify croplands in a region from satellite images and then predict crop growth, giving an accurate in-season forecast of the total agricultural production of a region, e.g. Europe or the Corn Belt. Currently, we face the problem of storing the data from the Sentinel-1 and Sentinel-2 satellites and from the ERA5 climate reanalysis, and we do not have the computational capacity to execute our codes. The forecast consists of two phases, each of which poses significant data-processing challenges, as follows:

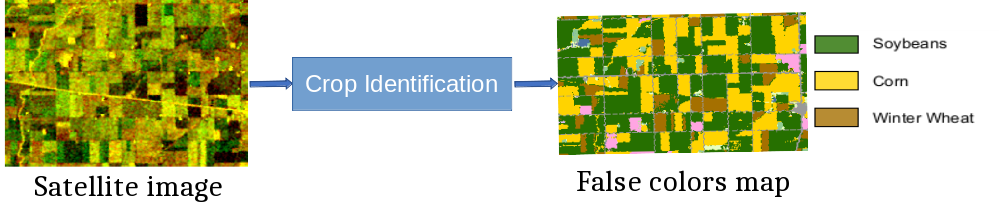

Phase 1: Crop identification via processing of satellite images

This phase identifies the crop type on the ground from remote sensing data. It is divided into two stages, namely the learning stage and the forecast stage. During the learning stage, the model, based on convolutional neural networks (CNNs), is trained on series of satellite images. We have worked extensively on supervised learning, comparing model outputs with real datasets, but have also experimented with unsupervised learning. During the forecast stage, the trained model is applied to every pixel of a large region (covering multiple tiles of satellite images). This stage is (schematically) represented by the sequence of operations: read pixel data from storage, compute the model output from the data, write the result to storage. In the forecast stage, computations are spatially independent, so this step can run in parallel for every pixel, which imposes a large load on the storage system.
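The read / compute / write sequence of the forecast stage can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the in-memory dictionary stands in for the storage system, `classify` is a trivial placeholder for the trained CNN, and the pixel values are invented. It only shows that each pixel can be handled independently of the others.

```python
from concurrent.futures import ThreadPoolExecutor

# Crop labels taken from the text; the mapping below is purely illustrative.
CROPS = ["wheat", "maize", "rapeseed", "barley"]


def read_pixel(storage, pixel_id):
    """Read the time series of one pixel from the (in-memory) storage."""
    return storage[pixel_id]


def classify(series):
    """Placeholder for the trained model: maps the series mean to a label.

    Assumes values normalised to [0, 1]; a real model would be a CNN.
    """
    mean = sum(series) / len(series)
    index = max(0, min(int(mean * len(CROPS)), len(CROPS) - 1))
    return CROPS[index]


def process_pixel(storage, results, pixel_id):
    """Read, compute, write for one pixel, independently of all others."""
    series = read_pixel(storage, pixel_id)
    results[pixel_id] = classify(series)


def forecast(storage, workers=4):
    """Run the per-pixel pipeline in parallel over every pixel in storage."""
    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for completion and re-raises any worker exception.
        list(pool.map(lambda pid: process_pixel(storage, results, pid), storage))
    return results


# Hypothetical pixel time series keyed by (row, column).
storage = {
    (0, 0): [0.1, 0.2, 0.15],
    (0, 1): [0.9, 0.8, 0.95],
    (1, 0): [0.4, 0.3, 0.35],
}
crop_map = forecast(storage)
print(crop_map)
```

Because no pixel depends on another, the number of workers can in principle grow until the storage system, rather than the computation, becomes the bottleneck.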

Phase 2: Data assimilation in plant growth

In the second phase, plant growth models are used to run numerical simulations of plant development in the field during the growing season. The state of the soil-plant-atmosphere system is simulated day by day, taking into account soil composition and climate data. This state is described by plant variables, such as the biomass of different organs or leaf area coverage, and by soil status, e.g. the water content in different sublayers. Although this is an exciting approach that opens up new possibilities, plant growth models have margins of error because:

- The model processes do not describe the entire functioning of the crop; for instance, the model may not simulate pest outbreaks.

- The mechanistic processes included in the model are never fully accurately described because we are trying to model a complex living system (there is no equivalent of Navier-Stokes equations for plant growth…).

To correct these errors, we use data assimilation methods, an approach similar to Kalman filtering for linear systems. These methods rely on plant development data acquired by remote field sensors during the growing season, and the model simulations are corrected online during the season using such data. Since we are not dealing with a simple linear model and we do not particularly expect errors to be Gaussian, we use methods that are numerically more complex than Kalman filters. The method is based on a Bayesian framework in which many trial simulations (particles) are run with different sets of model parameters to identify a better representation of reality. With this method, a large number of particles has to be generated to obtain a correct representation of the actual probability distribution. We have never been able to test this approach at large scale before because of the number of particles required. This is an HPC/BD problem that can benefit from fast storage to process the states of the particles as subsets. At each step, the amount of data to be read, written and processed is at least a few TBytes to achieve good results. In addition, several instances of this (data assimilation) phase may run concurrently because the data are spatialized (satellite image), with one instance corresponding to one pixel.
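To make the idea of particles concrete, here is a minimal sketch of a bootstrap particle filter, one common member of this family of Bayesian methods; the text does not state which variant the pilot uses. The logistic growth function, the parameter ranges, the noise levels and the synthetic observations are all assumptions chosen for illustration, and a Gaussian likelihood is used only to keep the example short (any likelihood function could be substituted).

```python
import math
import random


def grow(biomass, rate, capacity=10.0):
    """Toy daily logistic growth step, a stand-in for a real plant growth model."""
    return biomass + rate * biomass * (1.0 - biomass / capacity)


def likelihood(observed, predicted, sigma=0.3):
    """Unnormalised Gaussian observation likelihood (chosen for simplicity)."""
    return math.exp(-0.5 * ((observed - predicted) / sigma) ** 2)


def particle_filter(observations, n_particles=2000, seed=0):
    """Estimate the growth rate from daily biomass observations."""
    rng = random.Random(seed)
    # Each particle is a (growth rate, simulated biomass) pair.
    particles = [(rng.uniform(0.05, 0.5), 0.5) for _ in range(n_particles)]
    for obs in observations:
        # Forecast: advance every particle by one day with small process noise.
        particles = [
            (rate, max(grow(biomass, rate) + rng.gauss(0.0, 0.02), 1e-6))
            for rate, biomass in particles
        ]
        # Analysis: weight each particle by its agreement with the observation.
        weights = [likelihood(obs, biomass) for _, biomass in particles]
        if sum(weights) == 0.0:
            weights = [1.0] * n_particles  # fall back to uniform weights
        # Resampling: draw particles in proportion to their weights, then
        # jitter the rate slightly to keep some diversity in the population.
        chosen = rng.choices(particles, weights=weights, k=n_particles)
        particles = [
            (min(max(rate + rng.gauss(0.0, 0.005), 0.0), 1.0), biomass)
            for rate, biomass in chosen
        ]
    return sum(rate for rate, _ in particles) / n_particles


# Synthetic observations generated with a known rate (illustration only).
true_rate = 0.3
biomass = 0.5
observations = []
for _ in range(20):
    biomass = grow(biomass, true_rate)
    observations.append(biomass)

estimated_rate = particle_filter(observations)
print(f"estimated growth rate: {estimated_rate:.3f}")
```

Even this one-parameter toy uses thousands of particles per pixel; with a realistic model state and one filter instance per pixel, the particle states quickly reach the data volumes described above.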

1.2. Summary of developments

The first stages of pilot development, up to M12, focused on the first part of the workflow, crop recognition. This part is deployed as a Docker container, following the general approach of the EVOLVE platform. The simulation part was then deployed as a second Docker container in M15. The two containers communicate with each other. The first step uses the TensorFlow and CUDA libraries. The second step uses MPI. We then improved the way images from Sentinel-1 and Sentinel-2 are retrieved and stored. The metadata are now better managed in order to shorten the response time of the different requests.

2. Pilot developments and achievements so far

2.1. Crop Identification step

The first step, crop recognition, uses the TensorFlow and CUDA libraries.

For this step, we use the GPU resources available on the HPC platform. The input satellite images are provided by the Copernicus program. The algorithms developed make it possible to identify the crops present in the input images (wheat, maize, rapeseed, barley). The output is a false-color map representing the different crops.

Figure 1. First part of the proposed workflow

This part allowed us:

- to develop a script to download the satellite images onto the HPC platform.

- to set up at CybeleTech a registry to handle Docker images with ease.

- to use CUDA technology in a Docker container.

To run the program, we set up a JSON file that indicates the name of the Satellite images used as input and the folder where the result will be saved.
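As an illustration, such a configuration file could be written and read back as in the sketch below. The key names (`input_images`, `output_dir`), the file name and the image names are hypothetical; the actual schema used by the pilot is not given here.

```python
import json
import os
import tempfile

# Hypothetical configuration: key names and values are assumptions.
config = {
    "input_images": ["tile_A.tif", "tile_B.tif"],  # names of the input satellite images
    "output_dir": "results/crop_map",              # folder where the result is saved
}

REQUIRED_KEYS = {"input_images", "output_dir"}


def load_config(path):
    """Load the JSON file and check that the expected keys are present."""
    with open(path, encoding="utf-8") as handle:
        loaded = json.load(handle)
    missing = REQUIRED_KEYS - loaded.keys()
    if missing:
        raise ValueError(f"missing configuration keys: {sorted(missing)}")
    return loaded


# Write the file to a temporary directory and read it back.
with tempfile.TemporaryDirectory() as tmp:
    config_path = os.path.join(tmp, "run_config.json")
    with open(config_path, "w", encoding="utf-8") as handle:
        json.dump(config, handle, indent=2)
    loaded_config = load_config(config_path)

print(loaded_config["input_images"], loaded_config["output_dir"])
```

Checking the keys at load time makes a misconfigured run fail before any GPU resources are used.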

2.2. Simulation step

Once the crop type has been determined at a geographical point, we select the appropriate growth model to estimate the yield of the field.

The process is as follows:

- Gather all the input data (images).

- Start the MPI container with "docker-compose up -d" (the Docker image is mpi_cysim:dev).

- Run the code with "docker exec -it cybele-mpi-head bash /cybeletech/run_scripts.sh ITER NB_NODES", where ITER is the number of iterations (default = 10) and NB_NODES is the number of MPI nodes (default = 2).

- The maximum value of NB_NODES is 5. To use more nodes, modify docker-compose.yml.
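The launch steps above can be wrapped in a small helper that validates the arguments before anything is started. This is a sketch under stated assumptions: the helper `build_commands` is hypothetical, and it only builds and prints the two command lines quoted above without executing them.

```python
import shlex

MAX_NODES = 5  # limit stated in the text for the provided docker-compose.yml


def build_commands(iterations=10, nb_nodes=2):
    """Return the start and run commands as argument lists."""
    if iterations < 1:
        raise ValueError("ITER must be at least 1")
    if not 1 <= nb_nodes <= MAX_NODES:
        raise ValueError(
            f"NB_NODES must be between 1 and {MAX_NODES}; "
            "modify docker-compose.yml to add more nodes"
        )
    start_cmd = ["docker-compose", "up", "-d"]
    run_cmd = [
        "docker", "exec", "-it", "cybele-mpi-head",
        "bash", "/cybeletech/run_scripts.sh",
        str(iterations), str(nb_nodes),
    ]
    return start_cmd, run_cmd


start_cmd, run_cmd = build_commands(iterations=10, nb_nodes=3)
print(shlex.join(start_cmd))
print(shlex.join(run_cmd))
```

The argument lists could be passed to `subprocess.run` to actually launch the workflow; note that the `-it` flags expect an interactive terminal, so they may need to be dropped in a non-interactive script.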

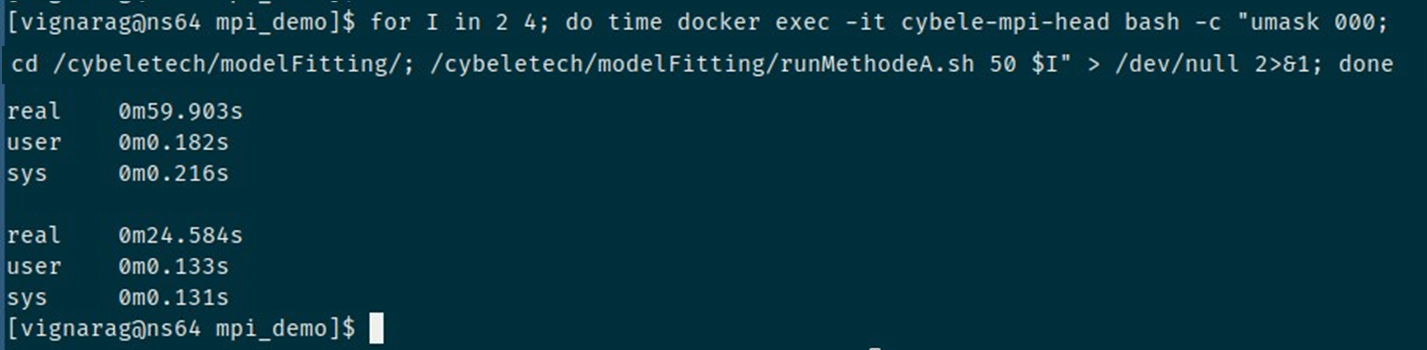

Figure 2. Terminal output from the workflow

In the example in Figure 2, the workflow is launched with 2 and then 3 MPI nodes. The computation time decreases when the number of nodes is increased, so it is worthwhile to launch several containers when the application allows it.

3. Challenges and next steps

3.1. Challenges

A big challenge is to train the CNNs over a large geographic region. Currently, the geographic area used for learning is 20x20 km, and the learning process requires 40 GB of RAM. We hope to be able to increase the learning area.

Another challenge is to find the best method for reducing the time needed to compute the different parameters in the simulation step.

3.2. Next steps

Automate the data flow between the different Docker containers.