
Deterministic Application Execution for Workloads with Varying User Input on Highly Utilized Clusters

Cloud computing has been gaining worldwide attention because of the flexibility and accessibility it provides. However, despite the potential for scalable use of computing resources, a closer study of workload traces from major cloud providers, such as Google, Microsoft, and Alibaba, reveals that a significant portion of the reserved resources is not utilized. The main reason is that users tend to request more resources than necessary, even at a higher monetary cost, to ensure that their applications meet their requirements. Hence, a significant amount of cloud resources remains unused, which reduces datacenter efficiency. In this work, we propose an adaptive system that efficiently manages cloud resources to increase server utilization. Our system automatically distributes and adapts the resources allocated to applications to maintain service level objectives (SLOs) on user-specified metrics and thus satisfy user requirements. We employ a Proportional Integral Derivative (PID) controller as the resource estimator and a custom-designed resource assigner that packs incoming applications onto as few servers as possible.
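The text does not give the controller's equations. As a rough illustration only, the following Python sketch shows one way a PID loop could turn the gap between a measured metric and its SLO into a new CPU allocation. The class name `PIDEstimator`, the gains, the CPU bounds, the normalized error, and the multiplicative update are all hypothetical choices, and the metric is assumed to be higher-is-better (e.g. throughput).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PIDEstimator:
    """Hypothetical PID-based CPU estimator; gains and bounds are illustrative."""
    kp: float
    ki: float
    kd: float
    min_cpu: float = 0.1   # assumed lower bound on the allocation (cores)
    max_cpu: float = 16.0  # assumed upper bound, e.g. one server's cores
    integral: float = 0.0
    prev_error: Optional[float] = None

    def next_allocation(self, current_cpu: float, slo: float,
                        measured: float, dt: float = 1.0) -> float:
        # Normalized error: positive when the app is below its SLO,
        # negative when it exceeds the SLO (higher-is-better metric assumed).
        error = (slo - measured) / slo
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        adjustment = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Scale the current allocation by the controller output, then clamp.
        proposed = current_cpu * (1.0 + adjustment)
        return max(self.min_cpu, min(self.max_cpu, proposed))


estimator = PIDEstimator(kp=0.5, ki=0.1, kd=0.05)
cpu = 2.0
for throughput in (60.0, 85.0, 105.0):  # hypothetical measurements, SLO = 100
    cpu = estimator.next_allocation(cpu, slo=100.0, measured=throughput)
    print(f"measured={throughput:.0f} -> cpu={cpu:.2f}")
```

Called once per monitoring interval, the proportional term raises the allocation while the application runs below its SLO and lowers it once the SLO is exceeded. The integral term accumulates past shortfall, so with these illustrative gains the allocation still rises slightly at the third sample even though the SLO is met; in practice the gains would likely need tuning per application.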

Current trends indicate that the demand for cloud resources has been increasing rapidly and is projected to grow even more in the future [1]. For this reason, providers are pressed to expand the compute capacity of their infrastructure. However, current resource management systems tend to waste a large percentage of resources: in most major cloud providers, at least 50% of the reserved resources are not utilized. Therefore, it is important to minimize resource under-utilization in existing clusters, which increases effective datacenter compute capacity without adding new servers.

Currently, a widely adopted approach for reserving resources is to let users allocate resources for their applications themselves. This approach is not problematic per se. However, because users are concerned about the quality of service of their applications, they usually allocate resources conservatively and based on experience, often considering the worst case or a bad case. Furthermore, they allocate resources only once, when the application is submitted to the datacenter, and rarely change the allocation afterwards. Therefore, applications typically get more resources than they actually require, resulting in underutilized servers.

This problem is exacerbated for long-running cloud services, because their incoming demand does not remain constant throughout their execution; therefore, their resource requirements change over time as well. An alternative approach is to let users define the desired level of performance for their application and have an automated system take care of resource allocation. This is more intuitive for users and, hence, less prone to mistakes.

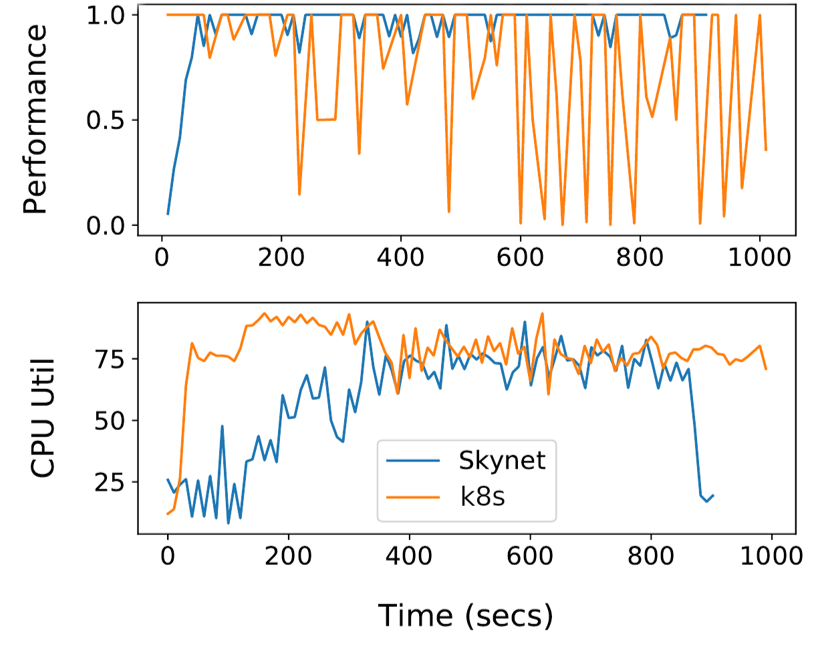

Figure 1. In this experiment, we show the benefits of our approach (Skynet) compared to unmodified Kubernetes on a cluster with five servers (80 cores, 160 threads) under high load (approximately 80% utilization on all machines).

Our goal is to move the burden of defining resource allocations for applications from the user to the system, to ensure deterministic execution of applications, and to keep the servers highly utilized. The main challenges we need to address for this purpose are:

- Estimate resource allocations that achieve the desired application performance.

- Handle the phases of long-running applications.

- Handle varying user input to applications during execution.

- Place applications to the underlying infrastructure efficiently.

Previous work employs various techniques to address some of the challenges described above, such as application performance profiling to manage resource allocation [2], machine learning to manage application placement [3], and partitioning the infrastructure to handle long-running applications [4]. We design and implement a resource management system for clusters that maintains the SLOs of applications to satisfy user requirements. Our system monitors a user-defined metric for each application, adjusts its resource allocation to keep the metric above a defined level, and achieves high cluster utilization by consolidating as many applications as possible on each server.
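The text describes its assigner only as packing applications onto as few servers as possible. A common way to approximate that goal for applications arriving one at a time is the best-fit bin-packing heuristic, sketched below in Python; this is a standard substitute, not the authors' custom assigner. Demands are assumed to be single CPU figures (e.g. the output of a resource estimator), and the function names `place` and `assign_all` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def place(free: Dict[str, float], demand: float) -> Optional[str]:
    """Best fit: put the demand on the server left with the least free CPU."""
    # Only servers with enough free CPU are candidates; ties break by name.
    fits = [(cap - demand, name) for name, cap in free.items() if cap >= demand]
    if not fits:
        return None  # no server has room; the caller decides (queue, reject, ...)
    _, name = min(fits)
    free[name] -= demand  # reserve the CPU on the chosen server
    return name


def assign_all(apps: List[Tuple[str, float]],
               free: Dict[str, float]) -> Dict[str, Optional[str]]:
    """Place (app, cpu_demand) pairs one at a time, in arrival order."""
    return {app: place(free, cpu) for app, cpu in apps}


servers = {"s1": 16.0, "s2": 16.0, "s3": 16.0}  # hypothetical free cores
apps = [("a", 6.0), ("b", 4.0), ("c", 5.0), ("d", 10.0), ("e", 20.0)]
print(assign_all(apps, servers))
```

With three 16-core servers and demands of 6, 4, 5, and 10 cores, best fit places the first three applications on `s1` and the fourth on `s2`, leaving `s3` idle; the 20-core request fits nowhere and is returned as `None`. Preferring the fullest feasible server keeps partially used machines busy before idle ones are touched, which is the consolidation effect the text aims for.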

We implement our work as a custom scheduler in Kubernetes, a widely adopted system for automating the deployment, scaling, and management of containerized applications. We evaluate our system and compare it to unmodified Kubernetes on our local five-server cluster (Figure 1). In this experiment, we deploy many applications in the cluster, resulting in high load on all servers. Figure 1 shows two time series: in the top graph, the y axis is the average performance of all applications in the cluster; in the bottom graph, the y axis is the average CPU utilization of all servers in the cluster. A performance level of 1 means that the application achieves its SLO; otherwise, the value indicates how close to the SLO the application performs. Our preliminary results show that our approach reduces SLO violations by approximately 5x (11% with our system vs. 53% with Kubernetes) at comparable cluster utilization.
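The text does not define exactly how the performance level or the violation percentage is computed. Assuming a higher-is-better metric and counting a violation whenever the level falls below 1, a minimal Python sketch of both quantities, plus the arithmetic behind the "approximately 5x" figure, could look like this (`performance_level` and `violation_rate` are hypothetical names):

```python
from typing import Sequence


def performance_level(measured: float, slo: float) -> float:
    """Assumed definition: 1.0 when the SLO is met, else the fraction reached."""
    return min(1.0, measured / slo)


def violation_rate(levels: Sequence[float]) -> float:
    """Fraction of samples (apps or time steps) with a level below 1."""
    if not levels:
        raise ValueError("no samples")
    return sum(1 for level in levels if level < 1.0) / len(levels)


# Reported violation rates: 53% with Kubernetes vs. 11% with the proposed system.
reduction = 0.53 / 0.11  # about 4.8, i.e. roughly 5x fewer violations
print(f"{reduction:.1f}x")  # prints 4.8x
```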

References

[1] Cisco. 2018. Cisco Global Cloud Index: Forecast and Methodology, 2016–2021. White Paper. https://www.cisco.com/c/en/us/solutions/collateral/service-provider/global-cloud-index-gci/white-paper-c11-738085.html.

[2] Yannis Sfakianakis, Christos Kozanitis, Christos Kozyrakis, and Angelos Bilas. 2018. QuMan: Profile-based Improvement of Cluster Utilization. ACM Transactions on Architecture and Code Optimization (TACO) 15, 3 (2018), 27.

[3] Christina Delimitrou and Christos Kozyrakis. 2014. Quasar: Resource-efficient and QoS-aware Cluster Management. In Proceedings of the 19th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS’14). ACM, New York, NY, USA, 127–144.

[4] P. Garefalakis, K. Karanasos, P. Pietzuch, A. Suresh, and S. Rao. 2018. Medea: Scheduling of Long Running Applications in Shared Production Clusters. In Proceedings of the 13th European Conference on Computer Systems (EuroSys'18). 4:1–4:13.