
TReM: Improving QoS in Multi-GPU Servers with Task Revocation

GPUs are widely used in datacenters and cloud environments because they offer higher compute density for latency-sensitive applications. Today, GPUs are mainly offered to applications in a dedicated manner, which leads to accelerator under-utilization. Previous work has focused on increasing utilization by sharing accelerators across batch and user-facing tasks. However, these studies have used conveniently small batch tasks, with execution times similar to the SLA of user-facing tasks. In the presence of long-running tasks, scheduling approaches without a GPU preemption mechanism fail to meet the response-time requirements of user-facing tasks. Existing approaches that support preemption in modern GPUs are either too slow, on the order of several hundred milliseconds or even seconds, or too restrictive, requiring the kernel source code or depending on the thread-block size. As a result, they prevent mixing long-running batch tasks with user-facing ones. In our work we design TReM, a revocation mechanism that provides constant and low kernel revocation latency for GPUs.

Heterogeneous datacenters deploy accelerators, typically GPUs and FPGAs, to process more data within the same power budget. Although resources in cloud environments are typically shared, most cloud providers offer accelerators in a dedicated manner. This exclusive assignment improves Quality of Service (QoS) at the cost of accelerator under-utilization, because a single task cannot always fully utilize the accelerator.

Recently, research [1] and industry [2] have worked towards increasing utilization by sharing GPUs across user-facing and batch processing tasks. User-facing tasks require tail latency guarantees based on a Service Level Agreement (SLA). The execution time of user-facing tasks, e.g., inference in machine learning models, ranges from several microseconds up to a few hundred milliseconds. In contrast, batch applications are latency tolerant and do not have strict per-task response-time requirements.

Previous SLA-oriented scheduling policies for GPUs [1] ensure that more than 95% of user-facing tasks meet their SLA. However, these studies only consider batch tasks with execution times similar to the SLA (Figure 1(a)). Under this assumption, one can afford to wait for a running batch task to finish before launching new user-facing tasks. In practice, batch tasks can have execution times orders of magnitude longer than those of user-facing tasks. Current SLA-oriented approaches cannot provide QoS to user-facing tasks when these share a GPU with long-running batch tasks (Figure 1(b)).

In our work, we consider the problem of sharing a GPU among user-facing and batch tasks with execution times ranging up to several minutes. To guarantee that user-facing tasks will meet their SLA target, we need a mechanism that preempts the running batch task [3]–[5].
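The timing argument above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function names and the 50 ms user-facing execution time are assumptions made for the example, while the 200 ms SLA and the 22 ms revocation latency are the values reported in the evaluation of this article.

```python
SLA_MS = 200      # SLA used in the evaluation of this article
REVOKE_MS = 22    # revocation latency reported for TReM

def response_waiting(batch_remaining_ms, user_exec_ms):
    """No preemption: the user-facing task waits for the batch task to finish."""
    return batch_remaining_ms + user_exec_ms

def response_revoking(user_exec_ms, revoke_ms=REVOKE_MS):
    """With revocation: the wait is bounded by the revocation latency."""
    return revoke_ms + user_exec_ms

def meets_sla(response_ms, sla_ms=SLA_MS):
    return response_ms <= sla_ms

USER_EXEC_MS = 50  # hypothetical execution time of one user-facing task

short_batch = response_waiting(100, USER_EXEC_MS)     # batch task nearly done
long_batch = response_waiting(60_000, USER_EXEC_MS)   # one minute of batch work left
revoked = response_revoking(USER_EXEC_MS)             # batch task revoked first

print(meets_sla(short_batch), meets_sla(long_batch), meets_sla(revoked))
```

Under these assumed numbers, waiting is acceptable only when the remaining batch time is small compared to the SLA, whereas revocation keeps the response time independent of the batch task's length.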

![Figure 1: Scenarios of user-facing and batch tasks time sharing a GPU.](/imagem/graph2.png)

Figure 1: Scenarios of user-facing and batch tasks time sharing a GPU. If a batch task is executing when a user-facing task arrives, priority inversion occurs. (a) User-facing tasks will not miss their SLA if the duration of the batch task (bd) is considerably smaller than the SLA [1]. (b) Otherwise, the same scenario can result in SLA violations with the existing approaches. (c) Effectively, we need a low-latency mechanism to preempt or revoke the batch task. (d) The timing of TReM.

Previous work has addressed the problem of long-running batch tasks using GPU preemption, as depicted in Figure 1(c). With GPU preemption, the currently executing task is stopped and its state is stored either (1) in GPU memory or (2) in host memory. The first case, as in Pascal’s preemption [3] or FLEP [4], has the drawback that data of preempted tasks can fill GPU memory, which is a scarce resource in data-intensive workloads. On the other hand, approaches that move task data to host memory [5] introduce variable preemption overheads.

Moreover, approaches such as NVIDIA Pascal preemption and FLEP, which slice the CUDA kernel at either the block level or the instruction level, cannot provide a constant and low preemption latency, because their overhead depends on the thread-block size or on the subtask granularity. Without a bounded preemption latency, we cannot ensure the SLA for user-facing tasks. Finally, approaches such as FLEP [4] require the task source code, which is not always available.

We design and implement TReM, a task revocation mechanism that preempts a task by aborting its currently executing kernel without saving any state and replaying it later. In particular, TReM spawns the actual kernel from inside a wrapper kernel, using CUDA Dynamic Parallelism. The wrapper kernel polls a variable allocated in GPU memory; if this variable is set to true, the wrapper kernel aborts its execution and, as a result, the actual kernel exits as well. Figure 1(d) shows the timing of TReM.
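The revoke-and-replay behavior can be illustrated with a small host-side model. This is a plain Python analogy, not CUDA code, and it does not reproduce the wrapper kernel or dynamic parallelism; the class `RevocableKernel`, its step-based notion of progress, and the parameter `set_flag_at_step` are all hypothetical names introduced for this sketch.

```python
class RevocableKernel:
    """Toy model of a kernel that is aborted without saving state."""

    def __init__(self, total_steps):
        self.total_steps = total_steps
        self.revoke_flag = False   # stands in for the polled variable on the GPU
        self.wasted_steps = 0      # work that has to be re-executed

    def run(self, set_flag_at_step=None):
        """Run the kernel once. Return True if it finished, False if revoked."""
        done = 0
        while done < self.total_steps:
            if set_flag_at_step is not None and done == set_flag_at_step:
                self.revoke_flag = True    # scheduler requests a revocation
            if self.revoke_flag:           # the wrapper polls the flag
                self.wasted_steps += done  # no state is saved; progress is lost
                self.revoke_flag = False
                return False
            done += 1
        return True


kernel = RevocableKernel(total_steps=100)
first_attempt = kernel.run(set_flag_at_step=30)   # revoked after 30 steps
replay = kernel.run()                             # replayed from the start
print(first_attempt, replay, kernel.wasted_steps)
```

The model makes the trade-off visible: revocation frees the accelerator immediately and leaves no state behind, and the price is that the progress made before the abort is executed again on replay.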

TReM has the following benefits:

- It revokes a running kernel at any arbitrary point of its execution, providing bounded latency overhead.

- It does not require the source code of the kernel or an offline phase for re-compilation.

- It prevents GPU memory monopolization from previously killed tasks with large memory footprint.

- It is agnostic to the CUDA GPU architecture.

A scheduling policy decides when TReM revokes batch tasks. We implement two scheduling policies, Priority and Elastic, that differ in systems with multiple GPUs. Priority tries to allocate a GPU for every user-facing task. As a result, Priority+TReM may revoke as many batch tasks as the number of newly arrived user-facing tasks. Elastic dynamically computes the minimum number of accelerators needed to sustain the SLA and devotes the remaining accelerators to batch tasks. Effectively, Elastic+TReM results in fewer revocations, i.e., less work to be re-executed.
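The difference between the two policies can be sketched by counting revocations. The code below is a simplified illustration under stated assumptions, not the policies as implemented: the function names are hypothetical, idle GPUs are assumed to be used before any batch task is revoked, and the way Elastic estimates its minimum GPU count (user-facing load as a fraction of total capacity, rounded up) is a placeholder, since the article does not give the actual computation.

```python
import math

def priority_revocations(new_user_tasks, idle_gpus, batch_gpus):
    """Priority: try to give every new user-facing task its own GPU.

    Assumption: idle GPUs are taken first, then batch tasks are revoked.
    """
    still_needed = max(0, new_user_tasks - idle_gpus)
    return min(still_needed, batch_gpus)

def elastic_reserved_gpus(user_load, total_gpus):
    """Elastic: GPUs kept for user-facing tasks (placeholder estimate).

    Assumption: user_load is the user-facing demand as a fraction of the
    capacity of all GPUs; the real policy may compute this differently.
    """
    return min(total_gpus, math.ceil(user_load * total_gpus))

def elastic_revocations(new_user_tasks, idle_gpus, batch_gpus,
                        reserved_gpus, user_gpus):
    """Elastic: revoke only until reserved_gpus serve user-facing tasks."""
    allowed = max(0, reserved_gpus - user_gpus)
    return priority_revocations(min(new_user_tasks, allowed),
                                idle_gpus, batch_gpus)

# Four GPUs, all running batch tasks, when three user-facing tasks arrive.
reserved = elastic_reserved_gpus(user_load=0.25, total_gpus=4)
print(priority_revocations(3, idle_gpus=0, batch_gpus=4))
print(elastic_revocations(3, idle_gpus=0, batch_gpus=4,
                          reserved_gpus=reserved, user_gpus=0))
```

In this hypothetical scenario Priority revokes one batch task per arriving user-facing task, while Elastic revokes only enough to reach its reserved GPU count, which is where the reduction in re-executed work comes from.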

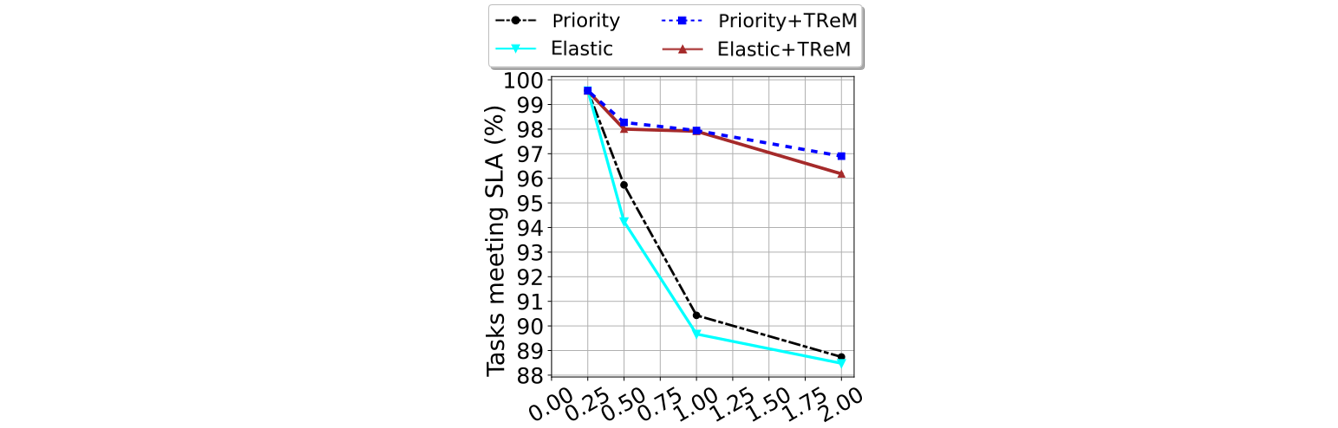

We use datacenter-inspired workloads to evaluate how TReM reduces SLA violations. Figure 2 shows the percentage of user-facing tasks that meet their SLA (200 ms) with increasing load. The x-axis is the incoming load, from low (0.25) to high (1.0) and oversubscribed (2.0). A load of 0.25 suffices to fully utilize one GPU. A load of 1.0 fully utilizes all four GPUs. A load of 2.0 oversubscribes our system by 2x. At low load, 99% of the tasks meet their SLA, irrespective of the policy used (Priority or Elastic). The picture changes as the load increases. Without TReM, Priority and Elastic meet the SLA for 90% of the tasks at load 1.0, whereas with TReM we achieve 98% at load 1.0 and 96% at load 2.0. The work that needs to be re-executed due to revocations is only 3%. Finally, TReM provides bounded revocation latency, 22 ms on our platform, and adds zero overhead to task execution due to dynamic parallelism.

Figure 2: Percentage of tasks that meet their SLA (y-axis) at increasing GPU load (x-axis) for different scheduling policies.
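The load scale on the x-axis maps directly to GPU demand on the four-GPU platform. The helper below is a minimal sketch of that mapping; the function names are hypothetical and only the load values and the GPU count come from the text.

```python
NUM_GPUS = 4  # the evaluation platform has four GPUs

def gpus_demanded(load, num_gpus=NUM_GPUS):
    """Number of fully utilized GPUs that a given load corresponds to."""
    return load * num_gpus

def oversubscription_factor(load):
    """How far the demand exceeds the capacity (1.0 means not oversubscribed)."""
    return max(1.0, load)

for load in (0.25, 1.0, 2.0):
    print(load, gpus_demanded(load), oversubscription_factor(load))
```

A load of 2.0 therefore corresponds to eight GPUs' worth of work arriving at a four-GPU server.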

References

[1] Q. Chen, H. Yang, J. Mars, and L. Tang, “Baymax: QoS awareness and increased utilization for non-preemptive accelerators in warehouse scale computers,” in Proceedings of the Twenty-First International Conference on Architectural Support for Programming Languages and Operating Systems, ser. ASPLOS ’16. New York, NY, USA: ACM, 2016, pp. 681–696. [Online]. Available: http://doi.acm.org/10.1145/2872362.2872368

[2] J. Luitjens, “CUDA streams: Best practices and common pitfalls,” in GPU Technology Conference, 2015.

[3] NVIDIA, “Whitepaper pascal compute preemption,” https://images.nvidia.com/content/pdf/tesla/whitepaper/pascal-architecture-whitepaper.pdf, 2016, online; accessed 25 July 2016.

[4] B. Wu, X. Liu, X. Zhou, and C. Jiang, “FLEP: Enabling flexible and efficient preemption on GPUs,” in Proceedings of the Twenty-Second International Conference on Architectural Support for Programming Languages and Operating Systems, ser. ASPLOS ’17. New York, NY, USA: ACM, 2017, pp. 483–496. [Online]. Available: http://doi.acm.org/10.1145/3037697.3037742

[5] H. Zhou, G. Tong, and C. Liu, “Gpes: A preemptive execution system for gpgpu computing,” in 21st IEEE Real-Time and Embedded Technology and Applications Symposium. IEEE, 2015, pp. 87–97.